31/08/2015

5182 views

Defining an Algorithm - Part 3

This is Part 3, the last instalment, on the Algorithms section at the beginning of the Basic Concepts chapter in the first volume of The Art of Computer Programming. In the previous article we covered Knuth's first example of an implementation of Euclid's algorithm. But, as Knuth points out, this system doesn't restrict defined computational methods to strictly effective ones, where there are a finite number of steps or where the steps can always be performed by a human being. As such, he formally defines a computational method using a variation on a generic Markov Algorithm that can be used for the same purpose whilst keeping the defined computational method effective.

It's worth mentioning at this point that this whole example makes significantly more sense if you know what a Markov Algorithm actually is. A Markov algorithm is a procedure for taking a string of letters and converting them to a different string of letters using an ordered list of rules. Each rule looks for a specific series of letters and replaces them with another. We start at the top of our list of rules and work our way down. If we find one that applies we use it to change some letters and move back to the top of the list of rules to go again. If we don't find one, we keep moving down the list until we either find one or get to the end of the list. If you reach the bottom of the list of rules without applying any, your process is finished. That's it. To appreciate this a little better, let's run over a very simple example that will help make Knuth's example feel familiar later. Take the following ordered list fo rules.

1 - "u" -> "do" 2 - "p" -> "wn" 3 - "up" -> "error"

Now, imagine we parse in the string of letters "up". Starting at the top of our list we check our first rule (if you find a "u", replace it with "do"), and it applies so we replace the "u" in "up" with "do" and our result is "dop". Now we start again, we check our first rule but there is no "u", so we move onto our second rule (if you find a "p", replace it with "wn") and it applies so we replace our "p" with "wn" and now we have the string "down". And we go again, we check our first rule and it doesn't apply, we check our second rule and it doesn't apply, and we check our third rule and it doesn't apply to our new string. We have got to the end of our list and applied no rules, so the process is over. We have converted the string "up" to the string "down".

1 - "up" 2 - "dop" 3 - "down"

Remember how I emphasised that the order of the rules mattered? Well, if we were to re-arrange our rules and swap rules 1 and 3 we would end up with the word "error" instead. Knuth's algorithm actually varies slightly from a traditional Markov algorithm, and we'll see how later. Now, let's get back to the book and see how Knuth plans to ensure our new algorithm definition is effective.

If we wish to restrict the notion of algorithm so that only elementary operations are involved, we can place restrictions on Q, I, Ω and f, for example as follows: Let A be a finite set of letters, and let A* be the set of all strings on A (the set of all ordered sequences x1x2...xn, where n ≥ 0 and xj is in A for 1 ≤ j ≤ n).

So we have a finite limited set of letters called A. And we have A* which contains all combinations of letters that can be made with letters from A. Each combination of letters in A* is an ordered sequence that is at least n long, but n ≥ 0 so A* must also include an empty combination with no letters at all. When Knuth says "xj is in A for 1 ≤ j ≤ n" what he means is that any letter(xj) in each combination in A* is a letter from our set A.

The idea is to encode the states of the computation so that they are represented by strings of A*.

What this means is that each string of letters in A* represents a state in Q, and so every state of our computation (input, output and everything between) can be represented by a string in A*. You may even be able to draw a comparison to the Markov algorithm we defined earlier at this point. Markov algorithms, as we said earlier, have inputs that are strings, they work on strings, and then output another string.

Now let N be a nonnegative integer and let Q be the set of all (σ,j), where σ is in A* and j is an integer, 0 ≤ j ≤ N; let I be the subset of Q with j = 0 and let Ω be the subset with j = N.

Now if you know in advanced that Knuth is working towards a Malkov algorithm and you understand the snippet above then we are finally starting to see a glimpse of the bigger picture. Q is the set of all pair (σ,j) where σ is one of the strings in A* and j represents the step (or the state) in the algorithm. When j = 0 it is our input (our first step), and when j = N it is our output (our final step). If you got back to our example algorithm then our input "up" would look like this ("up",0) and our output would look like this ("down",3).

If θ and σ are strings in A*, we say that θ occurs in σ if it has the form αθω for strings α and ω.

Here, Knuth is simply defining what we mean when we say that one string is in another. It's a fairly intuitive idea that one string is in another if it exists in the middle with strings either side. For example the string "rap" is in "trapped" with the strings "t" and "ped" either side. At this point it is worth mentioning that a string in A* can have a length of 0. As such you can also say that the string "up" is in the string "up" with two empty strings either side.

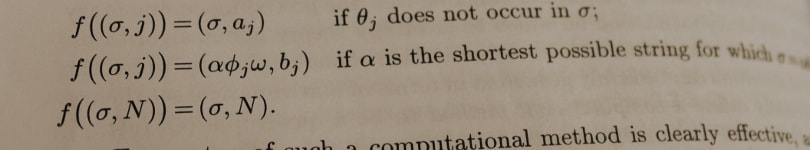

To complete our definition, let f be a function of the following type, defined by the strings θj, φj and the integers aj, bj for 0 ≤ j ≤ N:

f((σ,j)) = (σ,aj) if θj does not occur in σ

f((σ,j)) = (αφjω,bj) if α is the shortest string for which σ = αθjω

f((σ,N)) = (σ,N)

And this is where everything comes together. Remembering that j is the number of the step we are performing, we can define strings for θ & φ for each step (θj & φj) as well as integers a & b for each step that represent the step to move to next depending on the circumstances. The three function calls above are actually the rules we talked about in our Markov algorithm, so you try to apply the first function but if θj does occur, move down to the next function etc. So now we have a complex Markov algorithm with rules to fall through, but also steps defined with different strings to check based on j. No amount of explaining though will help as much as going through an example. Let's take our "up" example. First, lets declare our θ, φ, a & b for each step j.

| j - | θ | φ | a | b |

|---|---|---|---|---|

| 0 - | "u" | "do" | 2 | 1 |

| 1 - | "p" | "wn" | 2 | 3 |

| 2 - | "*" | "error" | 3 | 3 |

Now let's walk through this. We have our input "up" and we have our steps (j) defined. Look to the table above to see how we've defined the strings and integers θ, φ, a & b respectively for each step. We also have the ordered rules for our Markov algorithm as defined by Knuth. We start with the state ("up",0), and we check the first rule. Our first rule does not apply because σ ("up") does contain θ0 ("u"). We move down to our second rule which applies. We do have a string αθ0ω. α is an empty string θ0 is "u" and ω is "p". So we set our state as (αφjω,bj), in other words we replace the "u" with "do" and set our step as bj ( 1 ). Now we start again. We have our state ("dop", 1) and we check our first rule which does not apply because "dop" includes θ1 ("p"). The second rule applies and we do the same again and make our state (αφjω,bj), which this time is ("down", 3). And we're done.

If our input string did not contain "u" at the beginning our first rule would have applied and taken us directly to step 2, which would have replaced the whole string with "error". And that's it for the algorithms section! At the end, after the text, there are a set of questions. Question 8 asks you to create a number of steps and values for θ, φ, a & b for each step in order to implement Euclid's algorithm. Hopefully that shouldn't be too difficult for you now.

That's it for me until next time. I hope this was helpful, and as always if you have any questions or clarifications feel free to drop me a comment below. Until next time, happy reading!